project Anywhere

Wireless VR framework for real-time full-body interaction. ETH Zurich, 2014

The ultimate display would, of course, be a room within which the computer can control the existence of matter.

(Ivan E. Sutherland, The Ultimate Display 1965)

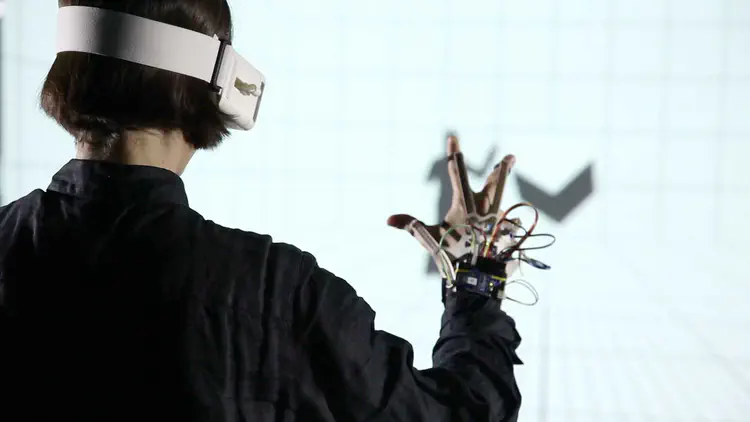

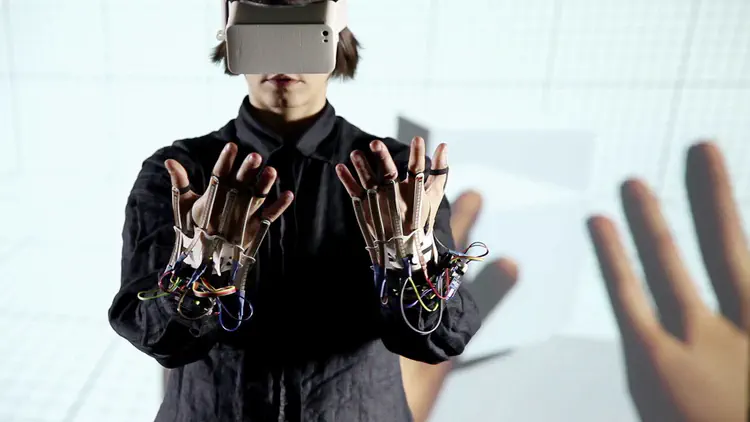

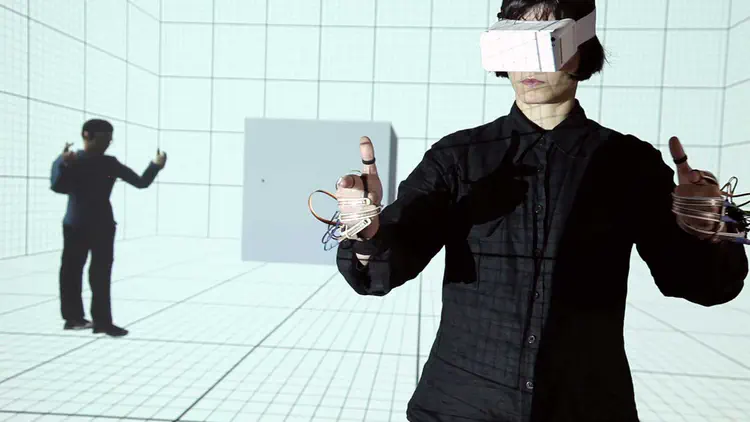

project Anywhere is a framework and proof-of-concept prototype for full-body interactive wireless VR, developed at the Chair for CAAD, ETH Zurich, in 2014 to investigate notions of virtual space and time and to explore the design of synthetic spatiotemporal sensory experiences. The project was initiated in mid-2014, following the release of the first commercial VR devices, which offered limited bodily involvement and capacity for movement. To address these limitations, the project developed a VR framework consisting of a mobile application, custom wireless VR HMDs, data glove prototypes and skeleton tracking, which together produce a vivid, real-time interactive presence in a virtual environment. In doing so, project Anywhere demonstrates the potential of virtual space as a valid architectural medium in itself (rather than an intermediary form for design or visualization), one that allows experimenting with synthetic kinaesthetic, visual and auditory sensory experiences. At the same time, it explores the capacity for designing spatiotemporal environments with an embedded dimension of time. The project’s title, “Anywhere”, refers to the generic nature of the digital-virtual environment, which can be made to simulate any physical context, real or imaginary.

Video demo

Project description

The project consists of the following bespoke software and hardware prototypes:

- a mobile multiplayer VR app,

- Omnimask: a 3D printed mobile VR head-mount,

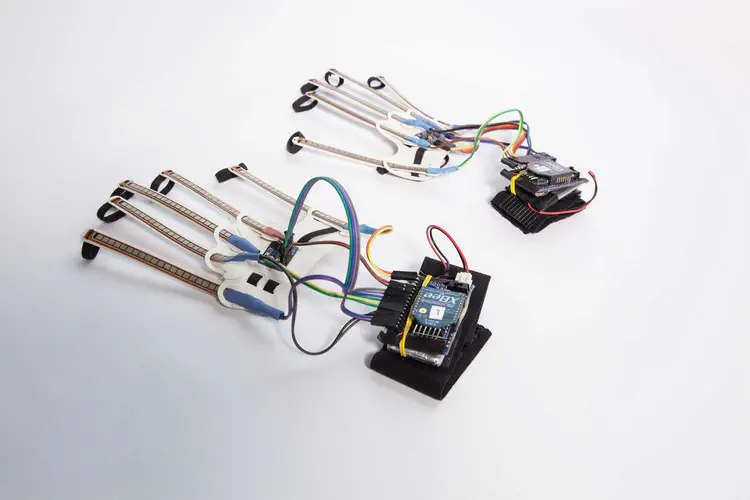

- Inteligloves: a pair of custom wireless data gloves, and

- Omnitracker: a sensor fusion software.

The main component of the project is a custom mobile application (1) developed in Unity as a multiplayer VR videogame. Mounted on a VR mask (2), the app provides a stereoscopic viewport for each individual user, through the point of view of their avatar in the shared virtual environment.
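As a rough illustration of what a per-user stereoscopic viewport involves, the sketch below derives left and right eye positions from an avatar's head position, its yaw, and an inter-pupillary distance. This is not the project's Unity code: the function name `eye_positions`, the axis convention (y up, yaw 0 looking along +z) and the default distance of 0.063 m are assumptions made for the example.

```python
import math

def eye_positions(head_pos, yaw_deg, ipd_m=0.063):
    """Return (left_eye, right_eye) positions for a head at head_pos.

    Assumed convention (hypothetical, not taken from the project): y is up,
    yaw 0 looks along +z, and positive yaw turns the head toward +x.
    """
    yaw = math.radians(yaw_deg)
    # Unit vector pointing to the avatar's right, perpendicular to the view direction.
    right = (math.cos(yaw), 0.0, -math.sin(yaw))
    half = ipd_m / 2.0
    x, y, z = head_pos
    left_eye = (x - half * right[0], y, z - half * right[2])
    right_eye = (x + half * right[0], y, z + half * right[2])
    return left_eye, right_eye

# Example: a head 1.7 m above the floor, looking straight ahead.
left, right = eye_positions((0.0, 1.7, 0.0), 0.0, ipd_m=0.064)
```

Rendering the scene once from each of the two positions yields the image pair shown side by side on the phone screen.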

Each user’s spatial presence is tracked in real time by their head-mount, data gloves, and a Kinect skeleton tracking sensor. Together, head, gesture and skeleton tracking provide 86 degrees of freedom, fused by the Omnitracker (4), a program written in Java/Processing. These data are synchronized with a web cloud and are used to animate the user’s avatar in real time (see image 13).¹ Thus, any movement of the subject in physical space, such as walking, head tilting or finger movement, corresponds 1:1 to a movement of their avatar.
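The fusion step can be pictured as merging the newest sample from each tracking source into a single avatar pose. The sketch below is a minimal illustration in Python, not the Omnitracker itself (which was written in Java/Processing). The function `fuse`, the `(timestamp, data)` sample layout, the dictionary keys and the `max_age` cut-off are all hypothetical.

```python
def fuse(skeleton, head, gloves, now, max_age=0.1):
    """Merge the newest sample of each source into one pose dictionary.

    Each sample is a (timestamp_seconds, data) tuple or None. Samples older
    than max_age seconds are skipped so a stalled sensor does not freeze
    part of the avatar in an outdated position. (Hypothetical layout.)
    """
    def fresh(sample):
        return sample is not None and now - sample[0] <= max_age

    pose = {}
    if fresh(skeleton):
        pose["joints"] = dict(skeleton[1])      # joint name -> (x, y, z)
    if fresh(head):
        pose["head_rotation"] = head[1]         # (yaw, pitch, roll) in degrees
    for side, sample in gloves.items():
        if fresh(sample):
            # One bend value per finger, per hand.
            pose.setdefault("fingers", {})[side] = list(sample[1])
    return pose

# Example: the right glove has not reported for half a second and is left out.
pose = fuse(
    skeleton=(1.00, {"head": (0.0, 1.7, 2.0)}),
    head=(1.02, (10.0, 0.0, 0.0)),
    gloves={"left": (1.03, [0.1] * 5), "right": (0.50, [0.9] * 5)},
    now=1.05,
)
```

A pose assembled this way is the kind of record that could then be sent over the network to animate the avatar on every connected device.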

Hardware prototypes developed for this project include a 3D printed VR head-mount (2) and a pair of data gloves (3).

The VR head-mount was designed for an iPhone 5 and featured adjustable inter-pupillary distance and focal length.

The data gloves (3) were developed using Arduino microcontrollers. Each glove features an XBee transceiver module, a 9 DoF sensor, and up to six flex sensors (for tracking individual finger movement). These were mounted on a lightweight, elastic, 3D printed glove base that is easily adjustable for different hand sizes.
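A flex sensor changes resistance as it bends, so the microcontroller sees a raw analog reading that has to be mapped to a finger bend. The sketch below shows one common way to do this with a two-point linear calibration. It is an assumption for illustration, not the gloves' actual firmware: the function name `bend_fraction` and the calibration readings are hypothetical, and the raw range depends on the board and the sensor circuit.

```python
def bend_fraction(raw, raw_straight, raw_bent):
    """Map a raw ADC reading to 0.0 (finger straight) .. 1.0 (fully bent).

    raw_straight and raw_bent are calibration readings taken per finger and
    per user. The result is clamped so sensor noise beyond the calibrated
    range cannot produce values outside [0, 1].
    """
    if raw_straight == raw_bent:
        raise ValueError("calibration readings must differ")
    t = (raw - raw_straight) / (raw_bent - raw_straight)
    return min(1.0, max(0.0, t))

# Example with made-up calibration values for one finger.
half_bent = bend_fraction(500, raw_straight=300, raw_bent=700)
```

Because the formula divides by the signed difference of the two calibration readings, it also works when the reading decreases as the finger bends.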

The framework was programmed to recognize hand gestures, which are used to perform various contextual virtual actions (see, for example, image 8), showing that a virtual presence can also be an active one.
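Gesture recognition from glove data can be as simple as thresholding the bend of each finger. The sketch below illustrates that idea only. The gesture names, the finger ordering, the 0.6 threshold and the function `classify_gesture` are assumptions, and the source does not describe which gestures or which method the project actually used.

```python
def classify_gesture(bends, threshold=0.6):
    """Classify a hand pose from five bend values in the range 0.0 .. 1.0.

    bends is ordered thumb, index, middle, ring, little (hypothetical order).
    A finger counts as curled when its bend reaches the threshold.
    """
    curled = [b >= threshold for b in bends]
    if all(curled):
        return "fist"
    if not any(curled):
        return "open"
    # Index extended while middle, ring and little are curled; thumb is ignored.
    if not curled[1] and all(curled[2:]):
        return "point"
    return "unknown"

# Example: index finger extended, other fingers curled.
gesture = classify_gesture([0.8, 0.1, 0.9, 0.9, 0.9])
```

An application could then bind each recognized label to a contextual action in the virtual environment.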

Related publications

- Constantinos Miltiadis (2016). Project Anywhere: An Interface for Virtual Architecture. IJAC 14 (4).

- Constantinos Miltiadis (2015). Virtual Architecture in a Real-time, Interactive, Augmented Reality Environment - project Anywhere and the potential of Architecture in the age of the Virtual. eCAADe 2015, TU Vienna.

- Photon Engine Dev Story (2015). Project Anywhere – Digital Route to an Out-of-Body Experience

Awards

- Ivan Petrovic Prize for the “best presentation by a young researcher” for the paper “Virtual Architecture in a Real-time, Interactive, Augmented Reality Environment”. eCAADe 2015, TU Vienna, September 2015.

- Second place, Zeiss VR One Contest for Mobile VR Apps, 2015.

- Arthur C. Clarke prize for “the most creative and unorthodox approach”. Museum of Science Fiction international architectural competition, 2014.

Press

- The Guardian & The Observer Tech Monthly (2015). Project Anywhere: digital route to an out-of-body experience: Constantinos Miltiadis’s project combines inteligloves and Kinect sensors to an Oculus Rift-style headset to provide the ultimate in impressive digital experiences.

- Euronews High Tech; Euronews Next (2015). Project Anywhere: an out-of-body experience of a new kind. Proyecto Anywhere, una nueva forma de jugar en línea - hi-tech [Spanish]

- Reuters (2015). Project Anywhere takes virtual reality gaming to new level

- DesignBoom (2014). Subjects manipulate project anywhere’s virtual universe in real-time

- Museum of Science Fiction (2014). The preview museum: A departure from museums as usual…

- Fubiz (2014). Virtual Universe in Real-time [French]

Exhibitions & installations

- Demonstration at the Carl Zeiss Headquarters, in the context of the Zeiss VR One App Contest. Oberkochen, Germany, June 2015

- Public installation at TEDxNTUA. Athens, 17.01.2015

Project information

project Anywhere was developed as a postgraduate master’s thesis in 2014 at the Chair for CAAD, ETH Zurich, under Prof. Ludger Hovestadt. It was first demonstrated at the Chair for CAAD in November 2014.

Credits

Concept and development

Constantinos Miltiadis

Prototyping support

Demetris Shammas & Achilleas Xydis

Video credits

Filming & Editing

Demetris Shammas

Photo documentation

Achilleas Xydis

Music

Michalis Shammas

Subject

Anna Maragkoudaki

Images

¹ For a discussion of the networking topology of this project, see the 2015 Photon Engine Dev Story: Project Anywhere – Digital Route to an Out-of-Body Experience.