Decoded Language

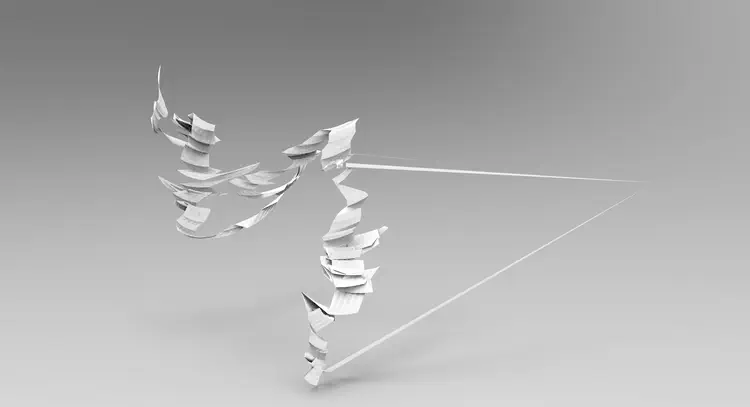

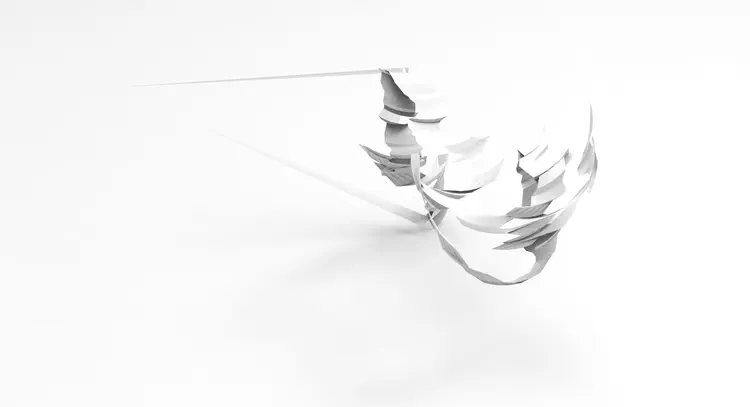

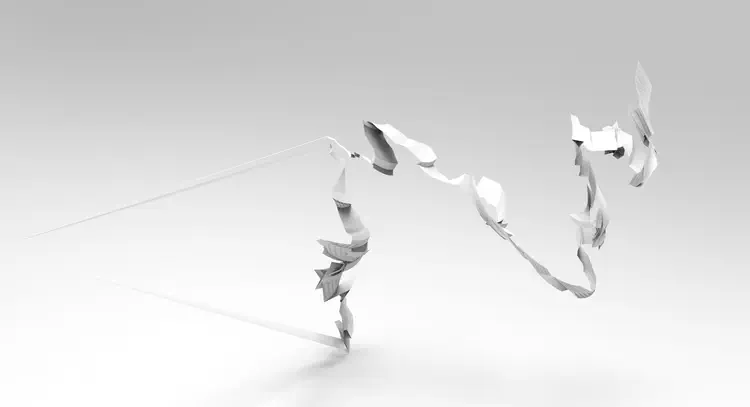

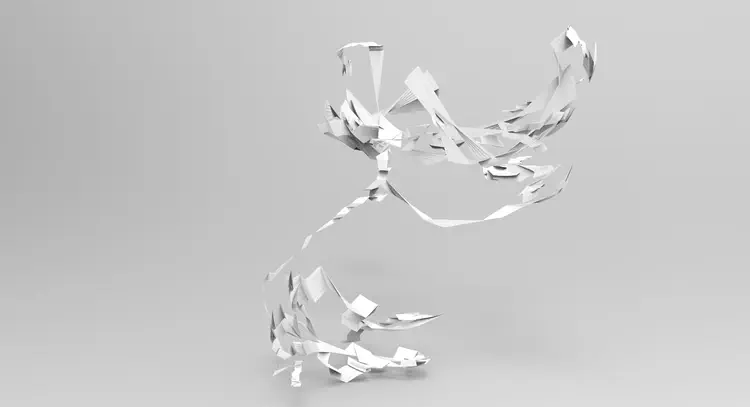

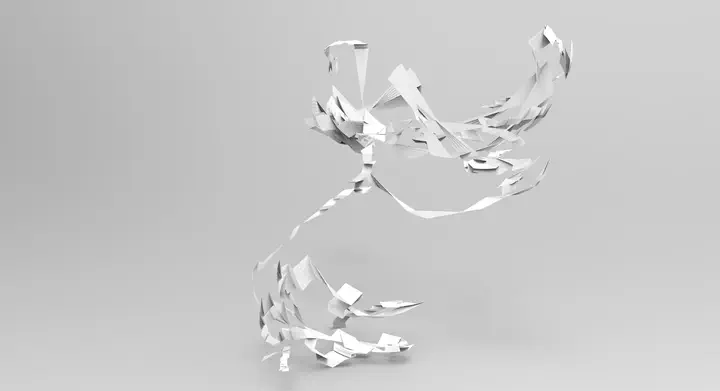

3D rendering of audience performance

Decoded Language is a multi-user interactive sound installation for SuperCollider and Kinect V2.

The installation uses real-time body (skeleton) tracking as an interface for controlling a virtual granular synthesis instrument.

This way, the installation produces an entanglement of the spatial domain of bodily movement with the temporal domain of sound.

Therefore, the installation invites the audience to create spatiotemporal choreographies and experience how spatial movement correlates to temporal manipulations of the originally linear dimension of listening.

The interaction interface was designed to be intrinsic and relative to one’s body rather than absolute. Absolute positions are therefore irrelevant; only body configurations, relative displacements, and rotations matter.
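As a rough illustration of what "relative to one’s body" can mean in practice, the sketch below re-expresses skeleton joints relative to a torso joint and scales them by shoulder width, so that moving the whole body through the room leaves the values unchanged. The joint names and the choice of normalization are assumptions made for this example, not the installation's actual mapping, and the sketch covers translation and scale only, not rotation.

```python
import math

def sub(a, b):
    """Component-wise difference of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))

def norm(v):
    """Euclidean length of a 3D vector."""
    return math.sqrt(sum(x * x for x in v))

def body_relative(joints):
    """Express every joint relative to the torso, in units of shoulder width.

    `joints` maps hypothetical joint names to (x, y, z) sensor coordinates.
    The result does not change when the whole body is shifted in space.
    """
    origin = joints["spine"]
    scale = norm(sub(joints["shoulder_right"], joints["shoulder_left"]))
    return {
        name: tuple(c / scale for c in sub(pos, origin))
        for name, pos in joints.items()
    }

# Example pose (made-up coordinates in metres).
pose = {
    "spine": (0.0, 1.0, 2.0),
    "shoulder_left": (-0.2, 1.4, 2.0),
    "shoulder_right": (0.2, 1.4, 2.0),
    "hand_right": (0.6, 1.2, 1.8),
}

# The same pose, two metres to the side and one metre further away.
shifted = {k: (x + 2.0, y, z + 1.0) for k, (x, y, z) in pose.items()}

features = body_relative(pose)
features_shifted = body_relative(shifted)
```

Both `features` and `features_shifted` describe the same body configuration, which is the property the interface relies on.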

Finally, all interaction data are recorded and visualized geometrically at the end of each session, offering a visual-spatial representation of the temporal manipulations of the listening timeline (see images below).

The installation uses audio sampled from Saul Williams’ spoken word performance of Coded Language at Def Poetry Jam (2004).

Project information

Decoded Language was developed between late 2018 and early 2019 at the Institute of Electronic Music (IEM), University of Music and Performing Arts, Graz, with the support of Prof. Marko Ciciliani and Prof. Daniel Mayer.

The installation consists of an algorithmic synthesizer developed in SuperCollider and 3D tracking and visualization software written in Processing, synchronized with each other via Open Sound Control (OSC).
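To give a sense of what travels between the two programs, here is a minimal sketch of an OSC 1.0 message carrying float values, built by hand and sent over UDP. It is written in Python purely for illustration (the installation itself uses Processing and SuperCollider), and the address `/grain/pos` is a hypothetical name, not one taken from the project. Port 57120 is the default port on which the SuperCollider language listens.

```python
import socket
import struct

def osc_string(text):
    """Encode an OSC string: ASCII bytes, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address, *values):
    """Build an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian floats
    return osc_string(address) + osc_string(type_tags) + payload

def send(message, host="127.0.0.1", port=57120):
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))

# A hypothetical control message: normalized playback position 0.5.
msg = osc_message("/grain/pos", 0.5)
```

Calling `send(msg)` would deliver the datagram to a SuperCollider instance on the same machine, where a responder registered for the matching address could update the synthesizer.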

The work was first presented at the IEM CUBE Concert on January 24, 2019.

A previous version of the installation, developed with Pure Data and Kinect V1, was presented in December 2017 at the Institute of Architecture and Media, TU Graz.